Bus Mixer Signal Flow

I promised to write about my new setup once it was in a working state. My goal was to replace the analog 19″ mixer rack (see my post on Loopdeck Embedded Linux) with a digital mixer that is much smaller and offers total recall. Every product I could find on the market was either too large or lacked enough auxes and busses. I don’t have that many channels in my setup, but I need to route all these microphone and synthesizer signals to many destinations at the same time: sum compressor, front-of-house subgroups, looper, reverb and delay effects, and my in-ear monitoring. So here is my DIY solution: a portable digital mixing desk built around a FireWire audio interface and a small MIDI fader controller.

Why is that special? Can’t we just do that with a few busses in Ableton Live?

- First, latency is crucial to me. For synth sounds I wouldn’t notice 6–12 ms of delay, but the mic signals from drums and vocals that go directly into my monitoring would feel strange if they were routed through software and therefore no longer arrived in real time.

- Second, independence and stability of the system are very important. The mixer should keep its state when I load a new song or fine-tune settings during soundcheck. If a software process crashes, there should still be sound, not silence or, worse, noise. Moreover, at least the mics and hardware synths should remain audible even when the computer is off.

- Third, but not least: the interface to this mixer should be very simple. Most of the settings I need are hard-wired, and during a live show I only control volumes, sends and bus assignments. Still, feedback is very important: LEDs and motor faders let me keep track of the current state without even needing a screen connected.
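To illustrate the feedback idea: whenever the mixer state changes (for example after a patch recall), the computer has to send the new values back to the controller so the motor faders move and the LEDs update. Below is a minimal Python sketch, assuming a controller that receives fader positions as plain 7-bit MIDI Control Change messages. The channel names and CC numbers in `FADER_CC` are hypothetical, and some controllers (for example those using the Mackie Control protocol) use pitch bend for faders instead.

```python
# Hypothetical assignment: one CC number per motor fader.
FADER_CC = {"drums": 0, "vocal": 1, "g2": 2, "looper": 3}


def cc_message(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI Control Change message (channel 0-15 = MIDI 1-16)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | channel, controller, value])


def level_to_cc(level: float) -> int:
    """Map a normalized fader level (0.0-1.0) to a 7-bit MIDI value."""
    level = min(max(level, 0.0), 1.0)  # clamp out-of-range levels
    return round(level * 127)


def feedback_messages(state: dict, channel: int = 0) -> list:
    """Return CC messages that move each known motor fader to the stored level."""
    return [
        cc_message(channel, FADER_CC[name], level_to_cc(level))
        for name, level in state.items()
        if name in FADER_CC  # ignore channels without a physical fader
    ]
```

For example, `feedback_messages({"drums": 1.0, "vocal": 0.5})` yields two messages that move the first fader to the top (value 127) and the second to its middle position (value 64). Actually sending the bytes is left to whatever MIDI library is at hand.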

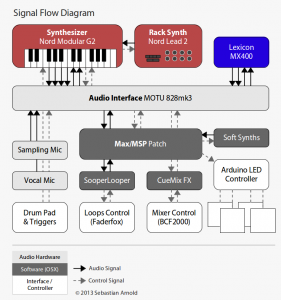

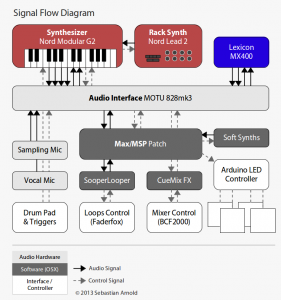

The system I built is based on a Mac Mini connected to a MOTU 828mk3 audio interface, all mounted in a 5U rack. This FireWire device has many input and output options and includes CueMix FX, which mixes audio with no added latency in standalone mode, even with its built-in EQs and compressors. The same approach could of course be adapted to the similar functions of the TotalMix FX software for RME Fireface interfaces. CueMix FX is controlled via Open Sound Control (OSC) from a large Max patch that I wrote over the last few months. The graphical window of this patch is my only interface to the computer, and it is displayed on an 8″ Faytech touch screen.

Putting things together

Max/MSP Bus Mixer

Screen and Faders

In the screenshot above, you can see the channels from left to right: drum mic, wet reverb return, vocal mic, several channels from the Nord Modular G2, e-piano VSTs, SooperLooper and the Lexicon FX return. I also included some modules that connect to other software: I use Fluidsynth, Lounge Lizard EP4 and Pianoteq 4 as permanently active soft synths. A patch management module recalls aux send assignments and synth patches, and it also includes a clock and a set timer :-) The loop module is an OSC bridge to SooperLooper, and there is also a light controller that interfaces with an Arduino for some RGB LED light effects. More on that later, after the tour:
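A bridge to SooperLooper boils down to the same kind of OSC messages, just with string arguments. As far as I know, SooperLooper listens on UDP port 9951 by default and accepts `/sl/<loop>/hit` with a command name such as `record` or `overdub`, and `/sl/<loop>/set` with a control name and a float value. Here is a minimal Python sketch that builds those messages; please check the SooperLooper OSC documentation for the exact command and control names your version supports. The helper names `sl_hit`, `sl_set` and `send` are my own, not part of any library.

```python
import socket
import struct

SL_PORT = 9951  # SooperLooper's default OSC port (verify for your install)


def _pad(data: bytes) -> bytes:
    """NUL-terminate an OSC string and pad it to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with string (s) and float (f) arguments."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("utf-8"))
        elif isinstance(arg, (int, float)):
            tags += "f"
            payload += struct.pack(">f", float(arg))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def sl_hit(loop: int, command: str) -> bytes:
    """Trigger a command such as 'record' or 'overdub' on one loop."""
    return osc_message(f"/sl/{loop}/hit", command)


def sl_set(loop: int, control: str, value: float) -> bytes:
    """Set a loop control such as 'wet' or 'feedback' to a float value."""
    return osc_message(f"/sl/{loop}/set", control, value)


def send(sock: socket.socket, message: bytes,
         host: str = "127.0.0.1", port: int = SL_PORT) -> None:
    """Send one OSC message to SooperLooper over UDP."""
    sock.sendto(message, (host, port))
```

Mapping a footswitch or controller button to `send(sock, sl_hit(0, "record"))` would then start and stop recording on the first loop.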

(Update) Because many of you have asked, here is an example Max patch that connects to CueMix FX using OSC: Download CueMixFX_Example.maxpat. You will also need to install the osctools external by Remy Muller.